What Is Neural Machine Translation and What Is All the Fuss?

Online translators such as Google Translate can be found all over the web – providing instant translations for anything from product descriptions on Amazon to posts on LinkedIn. On the surface, they’re simple tools that are easy to use.

But a look under the hood reveals a different story – one of a technology so complex that only a few insiders understand it. Say hello to neural machine translation (NMT).

What is neural machine translation, you ask? Read on to find out as we unravel this mystery in plain and simple terms.

Neural machine translation architecture

In 2017, a team from Google published a ground-breaking research paper called Attention Is All You Need.

We’ll explain what this enigmatic name means later, but the key thing here is that this paper changed the way machines understand human language forever.

Not only that, it also paved the way for the dominant model in machine translation today: the transformer model. As it happens, this model is also the architecture behind large language models such as ChatGPT (GPT stands for generative pre-trained transformer).

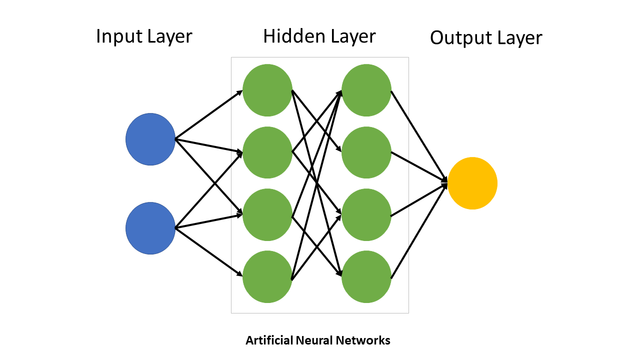

The transformer model is based on a neural network made up of thousands or millions of artificial neurons. Here’s a simplified diagram of what these networks look like:

The input layer neurons act as receivers for external stimuli, which in our case is the text we want translated. The output layer neurons, on the other hand, deliver the desired result of the calculation – the translation.

Meanwhile, inner layer (or hidden layer) neurons function by receiving signals only from other neurons within the network. Their interactions are determined by the “weight” of their connections, signifying the strength of these connections.

A neural network with a large number of inner layers is called a deep neural network. These networks are specifically designed for deep learning tasks, allowing them to tackle particularly complex problems.
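To make the idea of layers and weights more tangible, here is a minimal sketch in Python. The network size, the weight values, and the function names are all made up for illustration; a real translation model learns its weights from training data and is vastly larger.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """Compute one layer: each neuron takes a weighted sum of all its inputs."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs

def network_forward(inputs, layers):
    """Pass the signal through every layer in turn, from input to output."""
    signal = inputs
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal

# Hypothetical toy network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
toy_layers = [
    ([[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.5, 0.2]], [0.05]),                                 # output layer
]

result = network_forward([1.0, 0.0], toy_layers)
print(result)
```

Adding more entries to `toy_layers` makes the network "deeper", which is all the term deep neural network refers to.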

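To give you some context, Google’s original NMT system (GNMT) used 8 layers each in its encoder and decoder, while GPT-4, the foundation of ChatGPT’s latest version at the time of writing, is reported to have around 120 layers, although OpenAI has not confirmed this figure.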

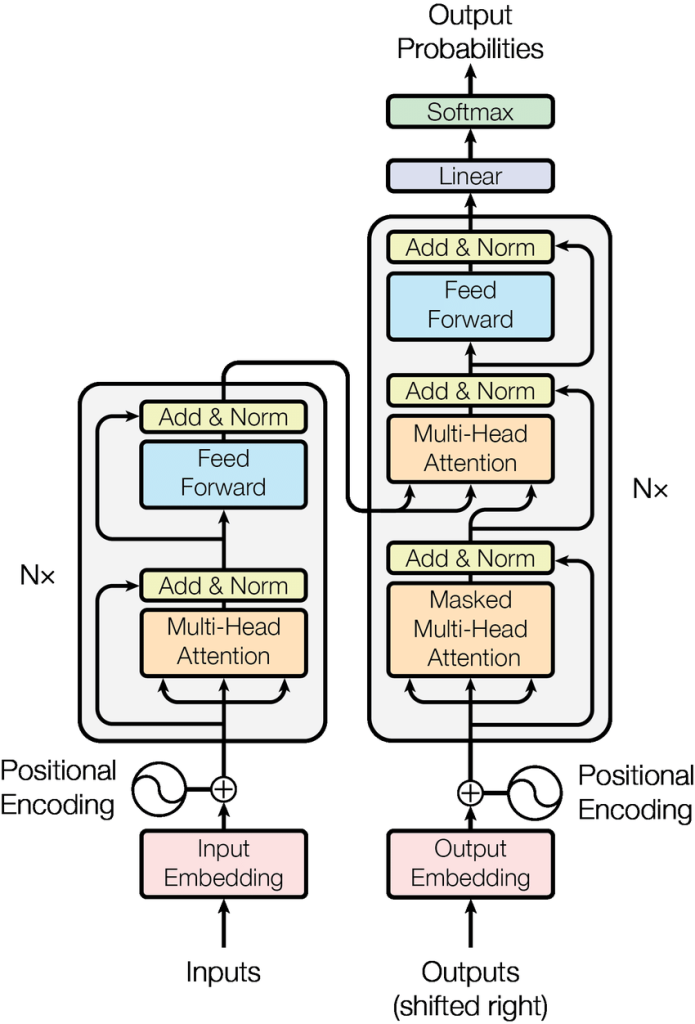

But let’s turn our attention back to the transformer model. Here’s a more detailed overview of this architecture:

Don’t worry, you don’t have to understand what everything means here. All you need to know is that this is an encoder-decoder architecture: an encoder reads the source text, a decoder writes the translation, and an attention mechanism connects the two.

What does this all mean? And how does this system translate text? Let’s take a look.

How exactly does neural machine translation work?

Vectors: The building blocks of neural machine translation

First up, we have the input layer, which is connected to the encoder.

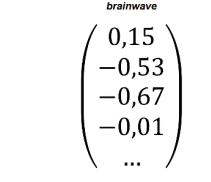

We need to start by converting the text we want to translate into a “language” the machine can read. Specifically, numbers. Even more specifically, vectors. The image below shows how the word “brainwave” is represented as a vector:

Source: Krüger: Transformer-Architektur

As you can see, a vector is a series of numbers arranged in a column. Each number within the brackets is the value of one vector dimension.

This type of vector is also called a word embedding. Think of it as the meaning of a word you would find if you looked it up in a dictionary.

The more dimensions a vector has, the more nuances of a word’s meaning it can capture. Large neural networks use hundreds or even thousands of dimensions for each word.
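In code, a word embedding is little more than a lookup table from words to lists of numbers. The sketch below assumes a tiny three-word vocabulary with invented 4-dimensional values; the names `EMBEDDINGS`, `UNKNOWN`, and `embed` are hypothetical, and real systems typically split text into subword pieces rather than whole words.

```python
# Hypothetical 4-dimensional embeddings; real systems learn these values
# during training and use hundreds or thousands of dimensions.
EMBEDDINGS = {
    "i": [0.12, -0.40, 0.33, 0.05],
    "love": [0.81, 0.22, -0.17, 0.64],
    "music": [0.45, 0.73, 0.09, -0.28],
}
UNKNOWN = [0.0, 0.0, 0.0, 0.0]  # fallback for words outside the vocabulary

def embed(sentence):
    """Turn a sentence into a list of vectors, one per word."""
    return [EMBEDDINGS.get(word, UNKNOWN) for word in sentence.lower().split()]

vectors = embed("I love music")
print(len(vectors), "words,", len(vectors[0]), "dimensions each")
# prints: 3 words, 4 dimensions each
```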

How does NMT place words into the right context?

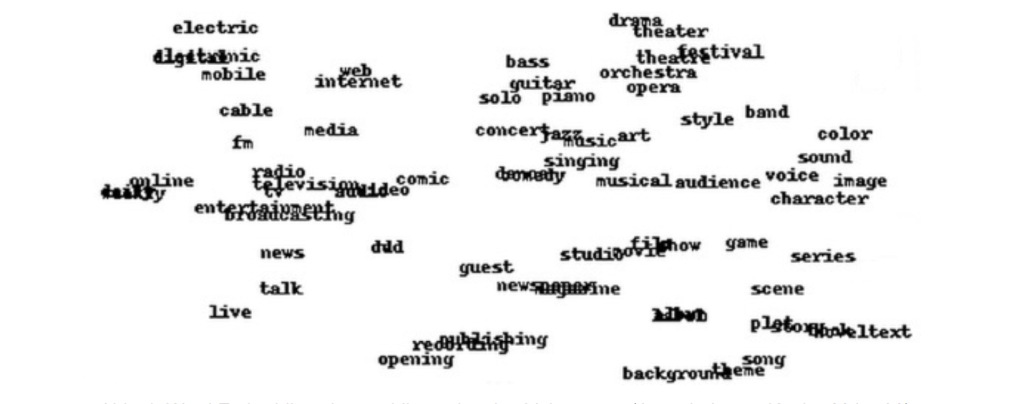

Words that have a similar meaning or appear in the same context are grouped near to one another in this vector space and form clusters:

Transformers contain an impressive amount of semantic knowledge. But it’s nowhere near enough to create good translations. This is because they are still missing information about the context.

Take a look at the following sentence:

I listened to my favorite Beatles track on my way home yesterday.

People intuitively know that “track” here is a song and not a racetrack. This can be inferred from the contextually related words “listened” and “Beatles”.

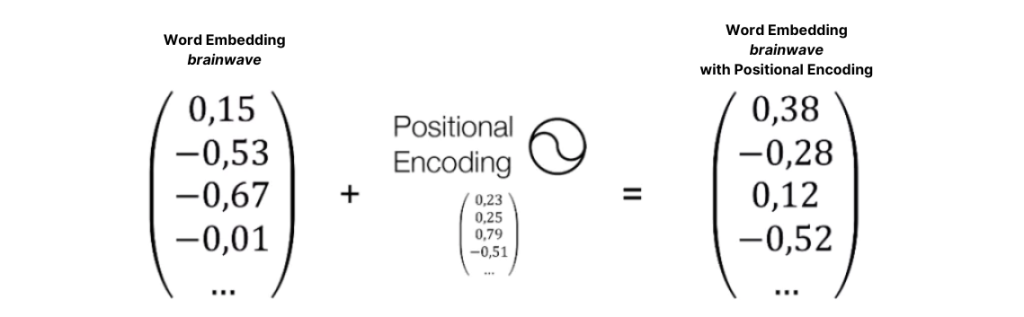

Neural machine translation needs this context too. We provide this by adding “positional encoding vectors” to our word embeddings. These contain information on the word order and position of the individual words in the text we want to translate.
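For the curious, here is a rough sketch of how such position vectors can be computed and added. It uses the sine and cosine scheme described in the Attention Is All You Need paper; whether a particular translation system uses this scheme or learns its position vectors instead is an assumption, and the function names are invented for this example.

```python
import math

def positional_encoding(position, dimensions):
    """Sinusoidal encoding: even indices use sine, odd indices use cosine."""
    encoding = []
    for i in range(dimensions):
        # Each pair of dimensions (2k, 2k+1) shares one wavelength.
        angle = position / (10000 ** ((i - i % 2) / dimensions))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def add_position(embeddings):
    """Add the position signal to each word vector, element by element."""
    dims = len(embeddings[0])
    return [
        [value + pe for value, pe in zip(vector, positional_encoding(pos, dims))]
        for pos, vector in enumerate(embeddings)
    ]

# Two identical (made-up) word vectors end up different once position is added.
same_word_twice = [[0.2, 0.1, -0.3, 0.5], [0.2, 0.1, -0.3, 0.5]]
print(add_position(same_word_twice))
```

Because the same word gets a slightly different vector at each position, the network can tell “dog bites man” apart from “man bites dog”.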

Let’s take a moment to explain what these numbers mean. As we can see, each vector dimension has a number between –1 and 1. In the example on the right, for instance, these numbers are 0.38, –0.28, 0.12, and –0.52.

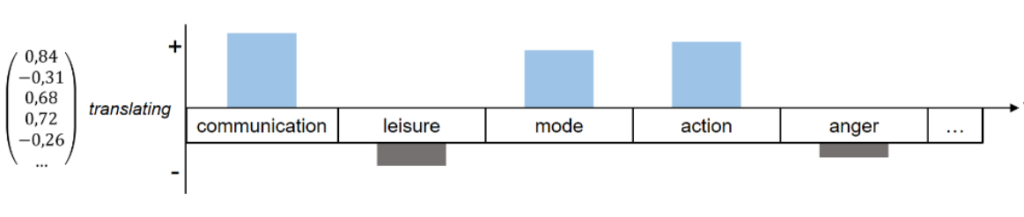

The higher the value, the more similar the two word vectors or words are. The lower the value, the less similar they are. Here’s an example to make this easier to understand:

In the diagram above, the word “translating” (on the left) is semantically related to words such as “communication” and “action”. This proximity is also reflected in the positive vector dimension values for “communication” (0.84) and “action” (0.72), both of which indicate a semantic match.

Words such as “anger” (–0.26) or “leisure” (–0.31), on the other hand, have far less to do with translation, which is reflected in the negative value.
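One common way to turn two word vectors into a single similarity score between –1 and 1 is cosine similarity. Whether the figures in the diagram were calculated this way is an assumption, and the three vectors below are invented purely to show the principle.

```python
import math

def cosine_similarity(a, b):
    """Return a score from -1 (opposite) to 1 (same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 3-dimensional vectors, not taken from any real model.
translating = [0.9, 0.8, 0.1]
communication = [0.8, 0.9, 0.2]
anger = [-0.7, 0.1, 0.9]

print("translating vs. communication:", cosine_similarity(translating, communication))
print("translating vs. anger:", cosine_similarity(translating, anger))
```

The first score comes out close to 1 and the second below 0, mirroring the positive and negative values in the example above.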

How do vectors turn into a usable translation?

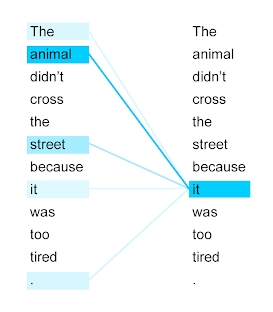

Attention is the magic word. This mechanism decides what the machine needs to focus on during the translation process. More precisely, it looks at all the words in the input sequence and analyzes how relevant these words are for processing the current word.

The attention mechanism identifies that “it” refers to “animal” rather than “street”. (Source: Google Research Blog)

The attention mechanism runs multiple times in parallel. Combining different attention distributions creates a contextualized representation of the input sequence that is more precise than a single attention distribution.
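Here is a stripped-down sketch of a single attention step, using the scaled dot-product formula from the Attention Is All You Need paper. The 2-dimensional vectors for “it”, “animal”, and “street” are invented for illustration; in a real model they are produced by learned projections, and several such attention “heads” run side by side before their results are combined.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query word."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dims = len(values[0])
    # The context vector is a weighted mix of all value vectors.
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dims)]
    return weights, context

# Hypothetical vectors: the query for "it" points mostly toward "animal".
words = ["animal", "street"]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[0.9, 0.1], [0.2, 0.8]]
query_it = [2.0, 0.5]

weights, context = attention(query_it, keys, values)
for word, weight in zip(words, weights):
    print(word, round(weight, 2))
```

With these toy numbers, “animal” receives the larger weight, so the representation of “it” is shaped mainly by “animal”.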

Now it’s time for the final step: generating the target text. This is done sequentially – word for word.

The vector representation of the source text is used to generate a “probability score” for each possible target word in the translation. Words that have already been translated are also factored in when the text is generated. This is known as an auto-regressive process and is vital to producing accurate translations.
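The word-by-word loop can be sketched as follows. The `TOY_MODEL` table is a hypothetical stand-in for a trained network: it already has the source sentence baked in and simply maps the words produced so far to probability scores for the next word. The sketch always picks the single most probable word (known as greedy decoding); real systems often use more elaborate search strategies.

```python
# Hypothetical stand-in for a trained model: maps the words generated so far
# to a probability score for each candidate next word.
TOY_MODEL = {
    (): {"Ich": 0.9, "Mir": 0.1},
    ("Ich",): {"liebe": 0.7, "mag": 0.3},
    ("Ich", "liebe"): {"Musik": 0.8, "Lieder": 0.2},
    ("Ich", "liebe", "Musik"): {"<end>": 1.0},
}

def greedy_decode(model, max_words=10):
    """Generate word by word, always taking the most probable next word."""
    translated = []
    for _ in range(max_words):
        scores = model[tuple(translated)]  # depends on everything produced so far
        best = max(scores, key=scores.get)
        if best == "<end>":
            break
        translated.append(best)
    return translated

print(" ".join(greedy_decode(TOY_MODEL)))
# prints: Ich liebe Musik
```

Each pass through the loop looks at the words already produced, which is exactly what makes the process auto-regressive.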

A fraction of a second and several million calculations later, ta-dah: we have a finished translation!

Why is neural machine translation so revolutionary?

The transformer architecture is the undisputed benchmark for machine translation. Here’s why:

- The attention mechanism is better at reflecting nuances in the meaning of the source text. It is also highly adept at “understanding” syntax and language rules. This leads to translations that are generally fluent and virtually free of spelling and grammar errors.

- The machine’s ability to “remember” has improved. Earlier technologies such as recurrent neural networks often struggled with longer sentences. The machine simply forgot what was at the beginning of a sentence, resulting in glaring translation errors. Modern neural machine translation systems reliably and coherently translate longer input sequences.

- Neural machine translation has an impressive ability to generalize. NMT is also excellent at translating sentences that aren’t already in its training data as one-to-one pairs. This is similar to when children are only able to “parrot” what they’ve heard when they’re learning to speak, before eventually going on to form new sentences on their own.

- Transformer models represent a major technical leap forward, as data is processed in parallel rather than sequentially. Previous models processed each word individually during translation – a slow and inefficient process. Transformers directly link all the words in an input sequence to each other in one step. This gets the best out of hardware that is designed to process vast quantities of data in parallel, such as graphics processing units (GPUs) and tensor processing units (TPUs).

What are the limits of neural machine translation?

While a transformer can produce feats of linguistic magic, it is still “nothing more” than a talented illusionist.

This is because the machine doesn’t understand text in the way a human does. All a transformer does is calculate the statistical probability of a certain sequence of words, word by word, without grasping the meaning a human would. So, it’s not magic, just simple mathematics.

The linguistic quality NMT produces also often doesn’t hold up on closer inspection. It’s not uncommon for the machine to produce a sort of “Frankenstein” text since the training data it draws on contains a wide variety of material written in different styles. That means you won’t get unique texts with their own personality. This phenomenon occurs even more with less common languages and languages that have very different grammatical structures.

NMT is just as limited when it comes to recognizing context – the system can only work with what it finds in the text. Does the English word “back” refer to the part of the body or to a UI button in a software program that takes the user to the previous page? If the answer isn’t in the text itself, then there isn’t anything else the machine can do about it.

This problem is exacerbated in translation software, where each sentence or text unit is translated in isolation due to the way these tools segment the text.

If a human translator is unsure about context, they look at external reference material or ask the person who wrote the text. Of course, humans can also draw on a much broader knowledge of the world and rely on their cultural intuition. Plus, they’re also familiar with the latest political and social developments that affect the way we use language, which by extension are then often indirectly incorporated into translation.

Another well-known problem with neural machine translation is its lack of predictability and transparency. This is because it’s impossible to definitively understand or even directly control how the AI arrives at any given translation suggestion. These systems are also inconsistent when it comes to translating terminology, which is a problem in regulated industries or where strict quality requirements need to be met.

Despite these limitations, NMT is still extremely useful for companies. Its ability to translate large volumes of text in a short amount of time is unbeatable, thus adding speed and cost-efficiency to the translation process.

A widespread understanding of these benefits led to the establishment of machine translation post-editing, a standardized process that enables companies to ensure that translations generated by NMT are correct and usable.

What are the best neural machine translation systems?

Google Translate is the tool that brought neural machine translation into the mainstream. Other popular and equally good alternatives include DeepL, Microsoft Translator, and Amazon Translate. If you’re looking for the right neural machine translation system for your company, you should check out our definitive ranking of the best AI translator tools.

Outlook

Let’s come back to our initial question: “What is neural machine translation?” While NMT is a milestone in natural language processing, the core technology has seen few fundamental changes since the transformer arrived in 2017. But that doesn’t mean the age of NMT is over.

The industry’s next step is merging neural machine translation with other AI technologies such as large language models. For instance, Google Translate is already integrating its own generative AI, Google Gemini, to improve the accuracy and fluency of its translations. For companies, this opens the door to new and fascinating ways as to how content can be translated in the future.

Curious about how you can leverage neural machine translation with LLMs to take your business global? Milengo’s team of localization experts has been exploring the integration of these innovative technologies to make translation processes smoother and quicker. Get in touch to find out how we can help you!

